Understanding Stereo Camera Technology for Depth Perception in Imaging and Robotics

Depth perception allows machines to interpret the real world by determining how far objects are located in three-dimensional space. One of the most effective technologies enabling this capability is the stereo camera, which uses two lenses to capture spatial information and calculate depth without relying on artificial light.

There are multiple approaches to perceiving depth, typically grouped into passive and active methods. Passive systems, like stereo cameras, rely on natural environmental light and visual data from camera pairs to estimate depth through triangulation. Active systems, on the other hand, project artificial light—such as infrared (IR) or laser patterns—to interact with surfaces and measure distance. Some advanced solutions even combine both approaches, using hybrid methods to improve performance in specific imaging and robotics applications.

Types of Depth Perception Technologies

Although there are several methods to measure depth, the most popular tools are Stereo Cameras, Time-of-Flight (ToF) sensors, and LiDAR.

Stereo Vision

Stereo Cameras use a passive depth sensing approach that combines two cameras to analyze the disparity between the images to estimate how distant an object is. This process is similar to human vision as the brain uses binocular disparity to extract depth information from two-dimensional retinal images.

Time-of-Flight Cameras

ToF sensors use an active approach that measures distance by illuminating objects with infrared light and calculating the time it takes for the light to be reflected back. The resulting depth values can be visualized as a spatial image in the form of a depth map.
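As a rough illustration of the timing principle, the sketch below converts a round-trip time into a distance. The function name is hypothetical, and it assumes a direct pulse-timing sensor; many ToF cameras measure the phase shift of modulated light instead, which this sketch does not model.

```python
SPEED_OF_LIGHT_M_PER_S = 299_792_458.0  # exact, by definition of the metre


def tof_distance_m(round_trip_time_s: float) -> float:
    """Distance to a surface given the round-trip time of a light pulse."""
    if round_trip_time_s < 0:
        raise ValueError("round-trip time must be non-negative")
    # The light travels to the surface and back, so halve the path length.
    return SPEED_OF_LIGHT_M_PER_S * round_trip_time_s / 2.0


# A pulse that returns after 20 nanoseconds hit a surface roughly 3 m away.
print(f"{tof_distance_m(20e-9):.3f} m")
```

The tiny time scales involved (nanoseconds per metre) hint at why ToF sensors need specialized timing hardware.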

LiDAR

Similar to ToF, Light Detection and Ranging technology (LiDAR) emits pulses to calculate the depth based on the time the pulse is reflected, but in this case, it uses a powerful laser source that can extend its working range and provide high-resolution spatial data. This is why it is often used in autonomous systems such as self-driving cars to generate accurate point clouds that can be used to avoid pedestrians and other cars even when they are far away.

Comparison of 3D Sensing Technologies

| Feature | Stereo Camera | Time-of-Flight (ToF) | LiDAR |
|---|---|---|---|
| Working Distance | Short (≤2 m) | Medium (0.4–5 m) | Long (up to 100 m+) |
| Accuracy | Moderate (5–10% of distance) | High (≤0.5% of distance) | Very High (mm-level) |
| Resolution | Medium | Low | High (point cloud) |
| Low-Light Performance | Poor | Good | Excellent |
| Bright-Light Performance | Moderate | Good | Excellent |
| Homogeneous Surfaces | Poor | Good | Excellent |
| Moving Objects | Good | Moderate | Excellent |
| Frame Rate | High | Variable | Very High (100+ Hz) |
| Power Consumption | High | Medium | High |
| Hardware Cost | Low | Medium | Expensive |
| Software Complexity | High | Low | Medium |
| Camera Size | Moderate | Good (compact) | Bulky |
| Use Environment | Needs ambient light | Indoor/Outdoor | All |

Each technology has its pros and cons. In summary, LiDAR is best for long-range, high-accuracy applications, and ToF cameras suit mid-range use cases with a good balance between accuracy and price. Stereo cameras are the most cost-effective but struggle in low-light conditions, which is why they are often paired with active light methods to increase accuracy in challenging conditions. Stereo vision is also not the only camera-based way to perceive depth; for example, a single camera combined with a light projector can estimate depth using a method called structured light.

The Mechanism Behind How Cameras Perceive Depth

Depth Perception with a Single Camera

Rather than using two lenses like stereo vision, it is also possible to estimate a depth map with a monocular system using a structured light setup in which one of the cameras is replaced with a stripe light projector that creates various stripe patterns, generating an artificial structure onto a surface. The distortion of the projected stripes on the object's surface is then used to determine the depth, resulting in more accurate close-range measurements. However, it is limited to stationary items since several images need to be acquired and processed to calculate the 3D information.

Additionally, there are also deep learning models that can generate a depth map from a single image, such as the Depth-Anything model, but these mechanisms are frequently less precise and require a high computational cost.

How Depth Sensing Works in Stereo Vision

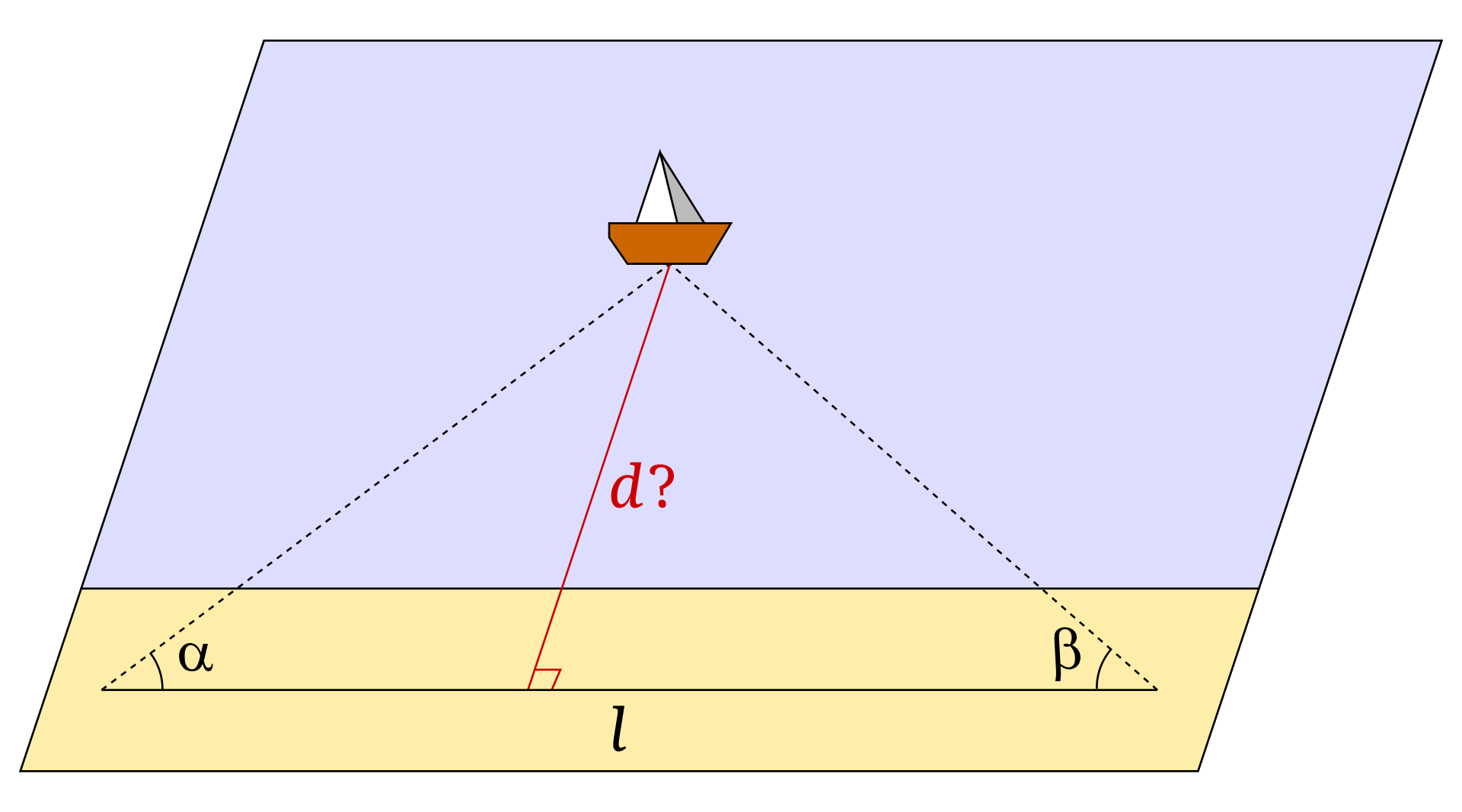

In stereo vision, depth is calculated using triangulation, which is the use of trigonometry to determine the position of points or distance measurements. In this way, triangulation can be used to calculate the distance of objects by measuring the stereo disparity.

What is Stereo Disparity?

Stereo disparity is the difference in position of corresponding points in two images; in other words, the displacement of a point between the two views. It makes it possible to recover the 3D position of a point because disparity and depth are inversely related: when an object is closer to the cameras, the disparity is larger because the viewing angles differ more. Conversely, structures that are farther away have a smaller disparity since the angles converge.

Stereo Disparity in Cameras

Stereoscopic systems operate by capturing images simultaneously with two monocular cameras placed in different positions. The stereo disparity can be found by matching feature points from the left and right images of the stereoscopic pair, which makes it possible to calculate a depth map of the photographed scene, provided the stereoscopic configuration has been calibrated beforehand. The calculation of 3D data involves extrinsic parameters, which define the spatial relationship between the cameras, and intrinsic parameters, such as the focal length of each lens. These parameters form the basis of camera calibration, which ensures accurate alignment and depth measurement.

In this way, the depth can be calculated using the following formula:

$$\text{depth} = \frac{\text{fx} \times \text{baseline}}{\text{disparity}}$$

where:

- depth [mm] is the depth in millimeters
- fx [px] is the focal length in pixels
- baseline [mm] is the distance between the two cameras of the stereo camera pair
- disparity [px] is the disparity in pixels
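The relationship between the quantities listed above can be sketched in a few lines of Python. The function name and the numeric values (an 800 px focal length and a 75 mm baseline) are hypothetical, chosen only to show how depth grows as disparity shrinks.

```python
def depth_from_disparity_mm(fx_px: float, baseline_mm: float, disparity_px: float) -> float:
    """Depth in millimeters from focal length (px), baseline (mm), and disparity (px)."""
    if disparity_px <= 0:
        # Zero disparity corresponds to a point at infinity; negative values are invalid.
        raise ValueError("disparity must be positive")
    return fx_px * baseline_mm / disparity_px


fx_px = 800.0       # hypothetical focal length in pixels
baseline_mm = 75.0  # hypothetical distance between the two cameras

# Halving the disparity doubles the computed depth.
for disparity_px in (60.0, 30.0, 15.0):
    depth_mm = depth_from_disparity_mm(fx_px, baseline_mm, disparity_px)
    print(f"disparity {disparity_px:5.1f} px -> depth {depth_mm:7.1f} mm")
```

Because depth is inversely proportional to disparity, equal steps in disparity cover ever larger depth intervals as objects move farther away.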

How Stereo Disparity Improves Accuracy

Stereo disparity improves depth estimation accuracy compared to monocular methods because it directly calculates depth rather than estimating it. Monocular cameras estimate depth using object size and intrinsic camera parameters, but these approaches are ambiguous because they are based on assumptions rather than accurate measurements. In contrast, stereoscopic systems calculate metric depth using disparity, which allows the exact distance to be calculated rather than estimated.

In addition, monocular methods often rely on deep learning models, such as the one mentioned above, making them prone to errors when the working environment differs from the data used to train the model. Stereo depth perception, by contrast, is based on geometric principles that hold regardless of the scene, so it does not depend on training data to remain accurate.

How Active Stereo Enhances Depth Perception

However, stereo systems are not perfect, as matching algorithms struggle to identify enough corresponding features when surfaces lack detailed structure. Passive stereo systems, which depend on the available light and do not use any external light source, therefore require good texture in the scene. On surfaces with little to no texture, like a wall or the ground, the resulting depth map will be noisy.

These limitations can be overcome with active stereo, in which an external light source is employed to simplify the stereo matching problem. It consists of projecting dot patterns onto the surfaces using a laser projector. The introduced texture provides enough features for the matching algorithm to calculate depth more accurately, even in scenes with uniform or untextured surfaces.
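To see why texture matters for matching, the following minimal sketch compares a small window from one image row against candidate positions in the other row using a sum of absolute differences (SAD). It is a toy, single-scanline illustration under the assumption of rectified images, not the matcher used by any particular camera; all names and pixel values are hypothetical.

```python
def sad(a, b):
    """Sum of absolute differences between two equally sized pixel windows."""
    return sum(abs(x - y) for x, y in zip(a, b))


def matching_costs(left_row, right_row, x, half_window, max_disparity):
    """SAD cost for each candidate disparity of the window centred at column x."""
    patch = left_row[x - half_window : x + half_window + 1]
    costs = []
    for d in range(max_disparity + 1):
        xr = x - d  # in rectified images, a left-image point shifts left in the right image
        if xr - half_window < 0:
            break
        costs.append(sad(patch, right_row[xr - half_window : xr + half_window + 1]))
    return costs


def best_disparities(costs):
    """All disparities that share the lowest cost; more than one means ambiguity."""
    lowest = min(costs)
    return [d for d, c in enumerate(costs) if c == lowest]


# Textured surface: the left row is the right row shifted by 3 pixels.
right_textured = [10, 80, 30, 200, 60, 120, 20, 90, 150, 40, 70, 110]
left_textured = [0, 0, 0] + right_textured[:-3]
print(best_disparities(matching_costs(left_textured, right_textured, 7, 1, 4)))

# Uniform surface (e.g. a blank wall): every candidate matches equally well.
flat = [128] * 12
print(best_disparities(matching_costs(flat, flat, 7, 1, 4)))
```

On the textured rows a single disparity wins, while on the uniform rows every candidate ties. A projected dot pattern effectively turns the second case into the first.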

The Role of Polarizers in Depth Imaging

Another con of stereoscopic cameras is that, as with untextured surfaces, intense direct light and scenarios with uneven lighting make the point matching process difficult. Reflections and shadows interfere with feature detection, thus decreasing the accuracy of the disparity calculation and causing errors in the depth results.

To mitigate these issues, stereo cameras can employ polarizing filters, which are optical filters that let light waves of a specific polarization pass through while blocking others. This way, imaging systems can reduce glare and enhance contrast, which results in better feature matching and more accurate depth estimation.

Applications of Stereo Cameras in Various Industries

Whether it's automotive solutions, automated manufacturing processes, or healthcare applications, many industries rely on stereo imaging for numerous operations.

Healthcare

Stereo vision is used in the healthcare industry for enhanced diagnostic imaging, patient monitoring, and even surgical assistance. For example, applications such as stereo endoscopy provide three-dimensional vision during surgery, ensuring safety in critical operations.

Retail & Logistics

From shelf monitoring and inventory management to checkout automation, stereo technology is utilized in retail to enhance customer engagement and operational efficiency.

Manufacturing

Industrial automation also benefits from vision systems that use depth perception as they improve quality inspection, product packaging, and even assembly lines in production.

Agriculture

Spatial sensing workflows are used to automate the inspection of agricultural produce, to monitor crops with drones equipped with stereo cameras, and to automate harvesting with autonomous mobile robots (AMRs) fitted with manipulators. These machines employ techniques like Simultaneous Localization and Mapping (SLAM) to navigate and map environments without the use of GPS.

Augmented Reality

Depth cameras capture highly accurate dimensional data, enabling the easy measurement of objects of any size or shape. This information can be utilized in AR applications, such as games, virtual try-on experiences, interior design, and furniture placement.

Autonomous Vehicles

Stereo cameras are integrated into Advanced Driver Assistance Systems (ADAS) for accurate depth perception, which, when paired with computer vision and AI, enables the detection and avoidance of obstacles such as pedestrians and the monitoring of traffic, thereby improving the safety and efficiency of self-driving cars.

Underwater Exploration

Stereo vision is used in remotely operated vehicles (ROVs) and autonomous underwater vehicles (AUVs) for applications such as 3D reconstruction of underwater structures and species classification.

Although stereo vision is not as robust to the challenging lighting of subsea applications as a laser scanner or a sonar, it provides color information that aids feature detection in taxonomy tasks.

One Platform, Multiple Applications

Stereo camera systems are cost-effective solutions that enable accurate 3D perception in different environments. Furthermore, integrations like sensor fusion and AI capabilities expand the possibilities, demonstrating how versatile these systems can be. Their flexibility allows them to be adapted to the applications mentioned above and to many others, such as surveillance, facial authentication systems, wildlife monitoring, and, of course, machine vision in robotics.

Automation in Robotics with Stereo Vision

Robotic systems are widely used in automation pipelines as their ability to operate in challenging conditions and provide consistent performance makes them indispensable in modern industries. However, even simple tasks such as picking a component from a predefined position can become difficult and will often fail if the component is not precisely located and the robot lacks a vision system to capture its surroundings.

By evaluating the image data in real time, vision-driven robots can calculate deviations and use this information to correct their movements. Furthermore, perceiving depth enables robots to understand their workspace in three dimensions, allowing for more accurate navigation and trajectory planning, making robots move beyond rudimentary tasks to complex ones like autonomous navigation and manipulation. For example, for pick and place tasks, the machine vision system needs to be able to continuously capture its working space to identify each component, determine its exact 3D position, and transmit the data to the robot arm so it can grab it effectively.
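Determining a component's 3D position from a depth map can be sketched with the standard pinhole camera model. This is a minimal illustration; the function name and the intrinsic values (`fx`, `fy`, `cx`, `cy`) are hypothetical, and lens distortion is assumed to have been removed already.

```python
def pixel_to_point_mm(u, v, depth_mm, fx, fy, cx, cy):
    """Back-project pixel (u, v) with a known depth into camera coordinates (mm)."""
    x_mm = (u - cx) * depth_mm / fx  # horizontal offset from the optical axis
    y_mm = (v - cy) * depth_mm / fy  # vertical offset from the optical axis
    return (x_mm, y_mm, depth_mm)


# Hypothetical intrinsics for a 1280x720 image.
fx, fy = 800.0, 800.0
cx, cy = 640.0, 360.0

# A component detected at pixel (840, 260), measured 1 m from the camera.
print(pixel_to_point_mm(840, 260, 1000.0, fx, fy, cx, cy))
```

The resulting point is expressed in the camera's coordinate frame; a real pick-and-place setup would still need a calibrated camera-to-robot transform before the arm can use it.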

Empowering the Industry with AI Vision

A stereo camera can provide raw depth data; however, pairing it with AI enables refining the stereo matching process, improving the accuracy of depth maps even in challenging scenarios such as environments with varying light. Furthermore, on-device computer vision capabilities are fundamental in applications that need to instantly interpret and represent the physical world, which is why AI-powered depth cameras are very popular in robotics applications such as factory automation.

Stereo cameras equip robots with depth perception that, paired with AI analysis, allows autonomous machines to understand their surroundings and make real-time decisions. This way, robots can adapt to dynamic settings and navigate even in busy scenarios such as warehouses, in which machines can plan the most efficient route and avoid collisions with human colleagues by running person detection and tracking algorithms.

Key Features of High-End Stereo Cameras

From warehouse robots to retail security, automation pipelines rely on cameras' precision. However, challenging factors like varying illumination, temperature, and range can limit their performance. So, how do modern cameras tackle these problems? This section will discuss the fundamental hardware specs that you should search for when choosing a stereo camera.

Just like regular cameras, stereo cameras have a resolution, which is the level of detail that the image sensor can provide, often expressed in megapixels, and a frame rate, which is the number of frames captured per second. Ideally, both of these specifications should be high to achieve the best results.

For high-precision tasks such as product inspection in electronics manufacturing, a high resolution is recommended because it yields more disparity levels in stereo vision systems. A higher frame rate, in turn, is desirable in fast-imaging applications, for example, when tracking fast-moving objects in robotics. Similarly, if your application is intended for high-speed scenarios, you should opt for a camera with a global shutter, as a rolling shutter would introduce distortions.

The Importance of Wide-Angle and Long-Range Depth Sensing

Besides this, stereo cameras, and cameras in general, have a focal length that determines both the field of view and the working range; hence, it should match the distances expected in the application. A longer focal length allows for farther viewing but narrows the field of view, while a shorter focal length provides a wide field of view suited to near depth.
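The link between focal length and field of view follows from the pinhole model, and a short sketch makes the trade-off concrete. The function name and the 6.3 mm sensor width are assumptions for illustration only, and an ideal distortion-free lens is assumed.

```python
import math


def horizontal_fov_deg(focal_length_mm: float, sensor_width_mm: float) -> float:
    """Horizontal field of view of an ideal pinhole camera, in degrees."""
    return math.degrees(2.0 * math.atan(sensor_width_mm / (2.0 * focal_length_mm)))


sensor_width_mm = 6.3  # hypothetical sensor width

# The field of view narrows as the focal length increases.
for focal_length_mm in (2.1, 4.0, 8.0):
    fov = horizontal_fov_deg(focal_length_mm, sensor_width_mm)
    print(f"f = {focal_length_mm:.1f} mm -> horizontal FOV = {fov:.1f} deg")
```

Real lenses, especially wide-angle ones, deviate from this ideal model, so datasheet values should take precedence over the estimate.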

Unlike monocular cameras, stereo cameras also have a baseline, which is the distance between the two cameras measured along the horizontal axis. The baseline is directly proportional to the depth, so a larger baseline is more suited to capture distant objects but may complicate matching for closer ones. On the other hand, a shorter baseline allows for better close-range depth perception but reduces accuracy further away.
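The effect of the baseline on long-range accuracy can be estimated with a first-order approximation obtained by differentiating depth = fx × baseline / disparity, which gives a depth error of roughly depth² × disparity error / (fx × baseline). The sketch below applies it; the function name and all numeric values are hypothetical.

```python
def depth_error_mm(depth_mm, fx_px, baseline_mm, disparity_error_px=1.0):
    """Approximate depth uncertainty caused by a given disparity error (first order)."""
    return depth_mm ** 2 * disparity_error_px / (fx_px * baseline_mm)


fx_px = 800.0  # hypothetical focal length in pixels

# Compare two hypothetical baselines at a 4 m working distance.
for baseline_mm in (75.0, 120.0):
    error = depth_error_mm(4000.0, fx_px, baseline_mm)
    print(f"baseline {baseline_mm:5.1f} mm -> about {error:.0f} mm per pixel of disparity error")
```

The error grows with the square of the distance and shrinks with a wider baseline or a longer focal length, which is the reason long-range cameras tend to use wider baselines.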

For example, long-range depth sensing is mandatory in autonomous vehicles as it allows for detecting distant objects and ensures safe navigation. Similarly, for surveillance and drone navigation, a wide-angle camera allows monitoring large areas, while a narrower field of view (FOV) would be more suited for precision applications such as biometric scanning for facial authentication systems. These devices use depth data to increase security, as it ensures that they can't be spoofed with a 2D photograph.

Adapting to Various Environments

Another relevant feature is environmental robustness, as these cameras are often used in demanding industrial and outdoor applications. This explains why high-quality devices frequently feature industrial-grade enclosures and state their Ingress Protection (IP) rating, which indicates the level of protection from water and dust. This is a necessity in fields like robotics, where maintaining the durability of robots that operate in challenging conditions is crucial.

Advanced Features: Pushing the Boundaries of Depth Perception

Sensor Fusion in Stereo Vision

The specifications mentioned above are the fundamental ones to consider first. However, stereo cameras go beyond basic monocular cameras, as they often integrate additional sensors such as Inertial Measurement Units (IMUs) to measure motion and orientation, thermal and IR sensors to detect heat, and even microphones to acquire audio for applications such as service robots.

Furthermore, stereo cameras are often paired with monocular RGB cameras to provide color, and even with technologies such as radar or LiDAR to increase depth measurement accuracy. These integrations enable sensor fusion, a process that combines data from multiple sensors to create a more reliable perception of the surroundings. The information from different sources can reduce uncertainty and improve the system's performance.
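One simple way to see how combining sources reduces uncertainty is inverse-variance weighting, sketched below. This is only one basic fusion scheme, chosen here for illustration; it assumes independent, unbiased measurements with known standard deviations, and the function name and numbers are hypothetical.

```python
def fuse_measurements(measurements):
    """Fuse (value, standard_deviation) pairs using inverse-variance weights."""
    weights = [1.0 / sigma ** 2 for _, sigma in measurements]
    total_weight = sum(weights)
    fused_value = sum(w * value for w, (value, _) in zip(weights, measurements)) / total_weight
    fused_sigma = (1.0 / total_weight) ** 0.5  # never larger than the best input sigma
    return fused_value, fused_sigma


# Hypothetical distance readings in meters: (value, standard deviation).
stereo_reading = (4.10, 0.08)
lidar_reading = (4.02, 0.02)

value, sigma = fuse_measurements([stereo_reading, lidar_reading])
print(f"fused distance: {value:.3f} m (sigma {sigma:.3f} m)")
```

The fused estimate leans toward the more precise sensor while still using both readings. Production systems typically rely on more elaborate filters that also account for motion over time.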

Combining Depth Perception with AI

Platforms like DepthAI integrate deep learning models to analyze stereo images along with data from different sensors to create enhanced depth maps that can be used in machine vision systems. This technology is particularly useful in fields like robotics and Augmented Reality systems, as it helps robots navigate and interact with their surroundings and enables AR devices to place virtual artifacts in real-world spaces.

Using Stereo Cameras for 3D Object Detection and Recognition

Pairing spatial data with neural networks makes it possible not only to detect objects but also to recognize and track them more efficiently than with 2D images alone. Detection models such as YOLO, combined with depth data, or stereo-specific models such as Stereo R-CNN, can detect and classify objects and use depth information to reduce uncertainty and provide accurate 3D bounding boxes.

This makes it important to choose a camera that provides the processing power the application requires. Additionally, optimized power consumption and low-latency processing are fundamental for meeting the performance demands of real-time applications while maintaining efficiency.
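A common, simple way to attach a distance to a 2D detection is to take the median depth inside its bounding box. The sketch below illustrates the idea; it is not the method of any specific SDK, the function name is hypothetical, and it assumes that a depth value of 0 marks pixels where matching failed.

```python
from statistics import median


def object_depth_mm(depth_map, box):
    """Median valid depth inside a box given as (x_min, y_min, x_max, y_max), max exclusive."""
    x_min, y_min, x_max, y_max = box
    valid = [
        depth_map[y][x]
        for y in range(y_min, y_max)
        for x in range(x_min, x_max)
        if depth_map[y][x] > 0  # assumption: 0 encodes a missing depth value
    ]
    return median(valid) if valid else None


# Tiny hypothetical depth map in millimeters: an object in front of a far wall.
depth_map = [
    [3000, 3000, 3000, 3000, 3000],
    [3000, 1200, 1210,    0, 3000],
    [3000, 1190, 1205, 1215, 3000],
    [3000, 3000, 3000, 3000, 3000],
]

print(object_depth_mm(depth_map, (1, 1, 4, 3)))
```

The median is less sensitive than the mean to background pixels and outliers that fall inside the box.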

Integrating Stereo Cameras into Existing Systems

Last but not least, a highly relevant feature is how easy it is to integrate these cameras into an application. Luckily, modern solutions offer compatibility with many languages and platforms by using industry-standard interfaces, APIs, and protocols that simplify integration with existing systems. In addition, some of the most popular depth cameras have user-friendly SDKs that deliver plug-and-play compatibility for quick deployment in diverse applications.

Top contenders like ZED, Intel RealSense, and Luxonis OAK cameras offer ready-to-use hardware and software designed for a streamlined initial setup, so users can test functionalities like running a pre-trained detection model out of the box. Moreover, they offer frameworks to quickly build or customize vision-based applications. For example, OAK cameras have predefined pipelines to train and deploy custom models, which simplify the process of gathering and annotating data for domain-specific solutions. This allows for rapid evaluation and improvement in domain-specific developments.

The World’s Most Powerful Cameras for Depth and AI

With the previous features in mind, let's compare the most powerful 3D stereo cameras from some of the leading brands in the industry.

| Brand | OAK (Luxonis) | RealSense (Intel) | ZED (Stereolabs) | Basler |
|---|---|---|---|---|
| Model | D Pro (w/ IMX378) | D455 | 2i | rc_visard 65c |
| Resolution | RGB: 12MP / Depth: 1MP | RGB: 1MP / Depth: 0.9MP | RGB: 4K / Depth: 2K | RGB: 1.2MP / Depth: 1.2MP |
| Framerate (Max) | RGB: 60 fps / Depth: 120 fps | RGB: 30 fps / Depth: 90 fps | RGB: 60 fps / Depth: 100 fps | RGB: 25 fps / Depth: 25 fps |
| Focal Length | RGB: 4.81 mm / Depth: 2.35 mm | N/A | RGB: 2.1 mm or 4 mm options | RGB: 4 mm |
| FOV (H × V) | RGB: 66° × 54° / Depth: 80° × 55° | RGB: 90° × 66° / Depth: 87° × 58° | 2.1 mm: RGB: 110° × 70° / Depth: 120°; 4 mm: RGB: 72° × 44° / Depth: 120° | RGB: x / Depth: 61° × 48° |
| Baseline | 120 mm | 75 mm | 95 mm | 65 mm |
| Ideal Depth Range | 0.7 m to 12 m | 0.6 m to 6 m, up to 20 m (Max) | 2.1 mm: 0.3 m to 12 m, up to 20 m (Max); 4 mm: 1.5 m to 20 m, up to 35 m (Max) | 0.2 m to 1 m (Working Distance) |
| Depth Accuracy | <2% at 4 m | <2% at 4 m | Translation: 0.35% / Rotation: 0.005°/m | 0.04 mm at 0.2 m, 0.9 mm at 1 m |
| Shutter Type | RGB: Rolling / Depth: Global | Global | Rolling | Global |
| Polarizer | No option | No option | Option to include | No option |
| Robustness | IP66 | Indoor/Outdoor | IP66 | IP54 |
| Operating Temperature | −20°C to +50°C (RVC2) | N/A | −10°C to +50°C | 0°C to 55°C |
| Sensor Integration | 12MP RGB (IMX378), Dual 1MP Stereo (OV9282), IMU (BNO085) | 1MP RGB, Dual Depth, IMU (BMI055) | Dual 4MP RGB, IMU, barometer, magnetometer, temperature | IMU |
| CV/AI Capabilities | 2D and 3D tracking, perception with filtering. Custom CV/AI functions | CV SDK integration | Neural Stereo Depth Sensing, Object Detection (with Jetson) | Visual odometry, SLAM, and other computer vision tasks |
| Processing Power | 4 TOPS (Myriad X VPU) | Intel RealSense Vision Processor D4 | Relies on the computing power of the connected device. It is optimized for NVIDIA GPUs | Nvidia Tegra K1 |
| Power Consumption | Up to 7.5 W | N/A | 5 V / 380 mA (1.9 W) | 18–30 V (supply voltage) |
| Interface Type | USB-C (USB 3.2 Gen1) | USB-C 3.1 Gen 1 | USB 3.1 Type-C | GigE |
| Compatibility | DepthAI API (Python, C++, ROS, various platforms) | RealSense SDK 2.0 (Windows, Linux, Android, macOS, ROS, OpenCV, etc.) | ZED SDK (Windows, Linux, Jetson L4T). Third-party integrations with Unity, ROS, MATLAB, Python and more | REST API, C, Python, .NET, OpenCV, Halcon, ROS, and more. Seamless integration with third-party software modules for robotics tasks |
| Price | $349 | $419 | $499 | $5k |

Each camera has unique strengths depending on the application, but based on the discussed features, we consider that the best use cases for each contender are:

- Luxonis OAK-D Cameras: Best for mobile robots, as they offer onboard AI processing and reliable depth perception even in low-light conditions due to their active stereo technology. They are also the most beginner-friendly, making them a great option for developers who are getting started.

- Intel RealSense Cameras: Ideal for scenarios involving high-speed objects or a moving camera, as they feature a global shutter in both the RGB and depth sensors. They also include an onboard vision processor for real-time depth processing, allowing them to operate without a dedicated GPU or host processor.

- Stereolabs ZED Cameras: Best for outdoor applications, as they have a wide FOV lens and feature long-distance sensing. They are also well suited for challenging environments, as they include an IP66-rated enclosure and the option to add a polarizer to the lens to reduce glare in sunny settings.

- Basler Stereo Cameras: Best for industrial automation, as they are GigE Vision cameras specifically designed for applications that require high-speed image acquisition and real-time data transfer. They are compatible with standard Ethernet infrastructure, making them easy to integrate into existing network setups. They also support third-party industry-specific integrations for tasks like bin picking and quality control. Basler products have proven their compatibility with multiple robotics solutions: KUKA, FANUC, Universal Robots, Denso, Techman, and many other robotics brands.

Frequently Asked Questions

What is a stereo vision camera?

A stereo vision camera is a system that uses two imaging sensors, separated by a fixed distance, to calculate depth using triangulation by measuring the disparity between corresponding points in the images.

What is the difference between a camera and a stereo camera?

Monocular cameras estimate depth using object size and intrinsic camera parameters, while stereo systems use two cameras to measure depth by comparing disparities in images. This allows calculating the exact depth instead of estimating it.

What is the point of a stereo camera?

What is the difference between a stereo camera and a ToF camera?

What is the difference between LiDAR and a stereo depth camera?

How accurate is a stereo vision camera?

In which applications does stereo vision outperform ToF and LiDAR?

What are EyeSight stereo cameras?

What does SLAM stand for?

What are the types of SLAM?

SLAM can be categorized into three types according to the device employed to acquire the data that is used to build the map:

- Visual SLAM (V-SLAM): Uses cameras (monocular, stereo, or RGB-D).

- LiDAR SLAM: Uses laser scanners for precise depth.

- Inertial SLAM: Combines cameras with IMUs (Inertial Measurement Units).

What is a stereo SLAM?

What is the role of scanning in SLAM?

In SLAM, different sensors are used to 3D scan areas, acquiring information that then goes through processes like feature extraction and pose estimation to generate detailed maps that are further optimized using techniques like loop closure and reprojection error minimization.
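The reprojection error mentioned above can be illustrated for a single point. This minimal sketch assumes a pinhole camera without distortion and a 3D point already expressed in the camera's coordinate frame; the function names and values are hypothetical.

```python
import math


def project(point_xyz, fx, fy, cx, cy):
    """Project a 3D point in camera coordinates onto the image plane (pixels)."""
    x, y, z = point_xyz
    return (fx * x / z + cx, fy * y / z + cy)


def reprojection_error_px(point_xyz, observed_uv, fx, fy, cx, cy):
    """Pixel distance between where a map point projects and where it was observed."""
    u, v = project(point_xyz, fx, fy, cx, cy)
    return math.hypot(u - observed_uv[0], v - observed_uv[1])


# Hypothetical intrinsics, map point (mm), and observed feature location (px).
fx, fy, cx, cy = 800.0, 800.0, 640.0, 360.0
map_point = (250.0, -125.0, 1000.0)
observed = (843.0, 264.0)

print(reprojection_error_px(map_point, observed, fx, fy, cx, cy))
```

An optimizer in a SLAM back end would adjust camera poses and map points to reduce the sum of such errors over many observations.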
